Meta is updating the way it delivers advertisements to users in order to foster a more positive experience. The changes fall into two parts: one restricts how companies can target teenage users, while the other aims to make ad delivery more “equitable” and less discriminatory.

It appears Meta is forcing companies to be more general with their ads instead of homing in on a specific group. Starting in February, advertisers will no longer be able to target teens on Facebook or Instagram based on their gender; a user's age and location will be the only targeting criteria available. This tightening of the rules follows a similar 2021 update that barred advertisers from targeting underage users based on their interests and activity on other apps.

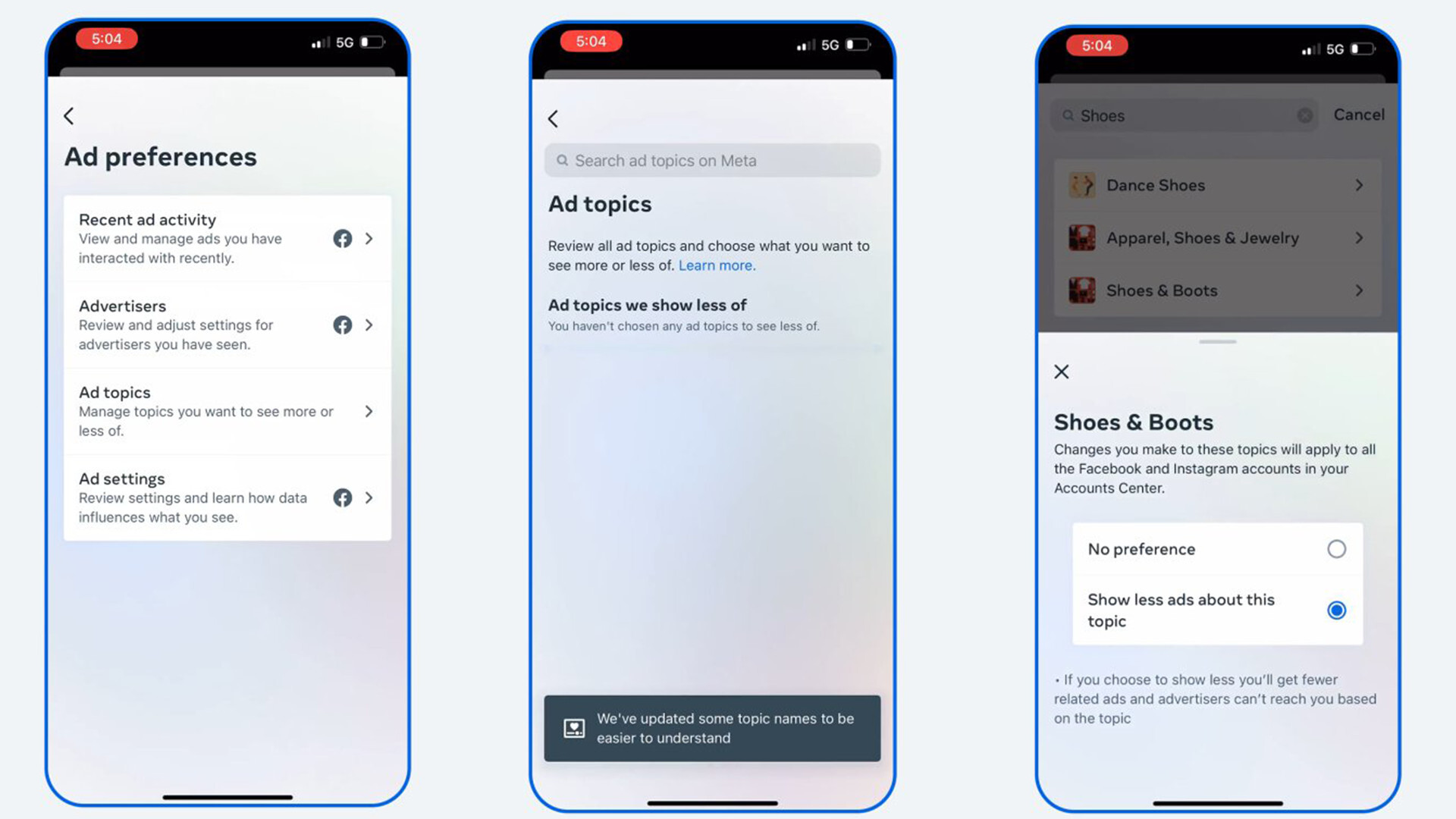

In March, teens will also get more tools in Ad Topic Controls to “manage the types of ads they see on Facebook and Instagram.” It doesn’t look like they’ll be able to stop seeing ads altogether; like it or not, teens will continue to encounter them. But at the very least, they can go into Ad Preferences in either app and choose See Less to see fewer ads of a given type.

Fighting discrimination

The second update centers on Meta's new Variance Reduction System (VRS), which aims to create a more “equitable distribution of ads” on its platforms, namely ads related to housing, employment, and credit in the US.

VRS comes after the company settled a lawsuit with the United States Department of Justice (DOJ) over allegations that it “engaged in discriminatory advertising in violation of the Fair Housing Act (FHA).” According to the allegations, Meta let advertisers prevent certain groups of people from seeing ads “based on their race, color, religion, and sex,” among other characteristics.

The technology behind VRS is said to use a new form of machine learning to serve advertisements that “more closely reflect the eligible target audience.” According to Meta, the system works by first showing a housing ad to a wide array of people. From there, it measures the “aggregate age, gender, and estimated race/ethnicity distribution” of the people who saw the ad.

VRS then compares those findings with the measurements of people who are “more broadly eligible to see the ad.” If it detects any discrepancies, the system adjusts its delivery to narrow the gap so that groups aren't excluded.
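Meta hasn't published code for VRS, so the following is only a hypothetical, heavily simplified Python sketch of the measure, compare, and adjust loop described above. The group labels, the use of total variation distance as the discrepancy measure, the 0.05 tolerance, and the capped per-group weights (`distribution`, `total_variation`, `adjustment_weights`, `max_weight`) are all illustrative assumptions, not Meta's actual method.

```python
from collections import Counter


def distribution(groups):
    """Return each group's share of a list of (estimated) demographic labels."""
    counts = Counter(groups)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def total_variation(observed, target):
    """Half the sum of absolute share differences (0 = identical, 1 = disjoint)."""
    groups = set(observed) | set(target)
    return 0.5 * sum(abs(observed.get(g, 0.0) - target.get(g, 0.0)) for g in groups)


def adjustment_weights(observed, target, max_weight=5.0):
    """Hypothetical per-group multipliers that nudge delivery toward the target mix.

    Under-served groups (seen share < eligible share) get a weight above 1,
    over-served groups get a weight below 1. Weights are capped at max_weight,
    an arbitrary illustrative value.
    """
    weights = {}
    for group, target_share in target.items():
        seen_share = observed.get(group, 0.0)
        if seen_share == 0.0:
            weights[group] = max_weight  # group never saw the ad: maximum boost
        else:
            weights[group] = min(target_share / seen_share, max_weight)
    return weights


# Toy example: the eligible audience is split 50/50 between groups "A" and "B",
# but the people who actually saw the ad skew 70/30 toward "A".
eligible = ["A"] * 50 + ["B"] * 50
delivered = ["A"] * 70 + ["B"] * 30

target = distribution(eligible)
observed = distribution(delivered)

gap = total_variation(observed, target)
print(f"discrepancy: {gap:.2f}")  # discrepancy: 0.20
if gap > 0.05:  # assumed tolerance before any adjustment is made
    print(adjustment_weights(observed, target))
```

In this toy case, group B makes up half of the eligible audience but only 30% of viewers, so it gets a weight above 1 while group A's weight drops below 1. A real system would presumably apply such corrections gradually while the ad keeps running rather than in a single step.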

Privacy in mind

Privacy is a key concern for VRS. The system's measurements will include “differential privacy noise” to prevent it from learning and retaining information about specific individuals and acting on it. It also won’t have access to people’s actual age, gender, or race, since that data consists entirely of estimates.
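Meta describes the noise only as “differential privacy noise” and doesn't say which mechanism it uses. As an assumption, the Python sketch below uses the textbook Laplace mechanism on aggregate group counts; `laplace_noise`, `noisy_counts`, the group labels, and the `epsilon` value are all hypothetical. The idea is that the system only works with noisy totals, so the released numbers reveal very little about whether any one person is included.

```python
import random


def laplace_noise(scale, rng=random):
    """Draw Laplace(0, scale) noise as the difference of two exponential draws."""
    # If X and Y are i.i.d. exponential with mean `scale`, X - Y ~ Laplace(0, scale).
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def noisy_counts(counts, epsilon, rng=random):
    """Release per-group audience counts with Laplace noise (a generic DP technique).

    Each person falls into exactly one group, so adding or removing one person
    changes a single count by at most 1 (sensitivity 1). Noise with scale
    1/epsilon therefore gives epsilon-differential privacy for the whole histogram.
    """
    scale = 1.0 / epsilon
    # Clamping to 0 is post-processing and does not weaken the privacy guarantee.
    return {group: max(0.0, count + laplace_noise(scale, rng))
            for group, count in counts.items()}


# Hypothetical aggregate of estimated demographics among people who saw an ad.
rng = random.Random(42)
print(noisy_counts({"A": 700, "B": 300}, epsilon=1.0, rng=rng))
```

Lowering `epsilon` widens the noise and strengthens privacy at the cost of accuracy. With counts in the hundreds, noise of scale 1 barely shifts the distribution that would be compared against the eligible audience.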

The DOJ seems to be pretty happy with these changes. U.S. Attorney Damian Williams said the DOJ appreciates Meta working with the government and “taking the first steps towards addressing algorithmic bias.”

Currently, VRS works only with housing ads in the United States, but Meta plans to expand it to employment and credit ads later this year. We asked the company whether these changes are exclusive to the US or will roll out globally. This story will be updated if we hear back.

While it's good that Meta is improving its ad policy, some of us would prefer to see no ads at all. If you’re one of those people, be sure to check out TechRadar’s best ad blockers list for 2023.
